Abstract:

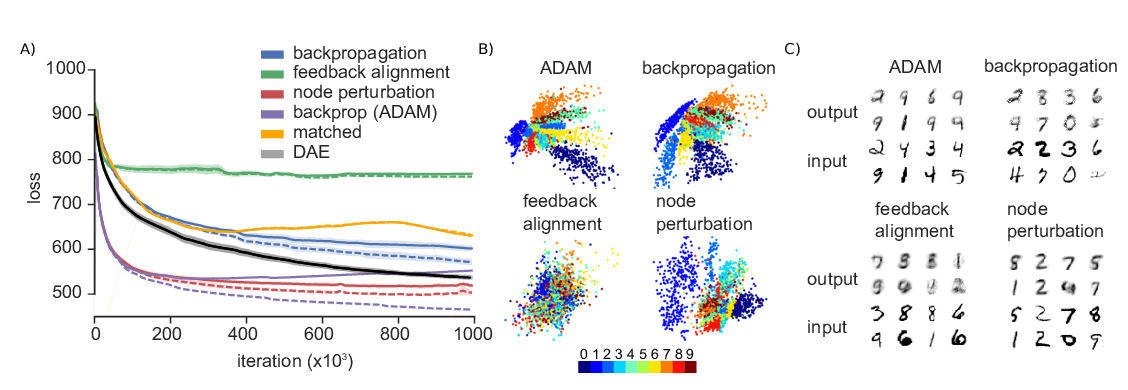

The family of feedback alignment (FA) algorithms aims to provide a more biologically motivated alternative to backpropagation (BP) by replacing the computations that cannot realistically be implemented in physical brains.

While FA algorithms have been shown to work well in practice, there is a lack of rigorous theory proving their learning capabilities.

Here we introduce the first feedback alignment algorithm with provable learning guarantees. In contrast to existing work, we make no assumptions about the size or depth of the network, except that it has a single output neuron, as in binary classification tasks.

We show that our FA algorithm can deliver its theoretical promises in practice, surpassing the learning performance of existing FA methods and matching backpropagation in binary classification tasks.

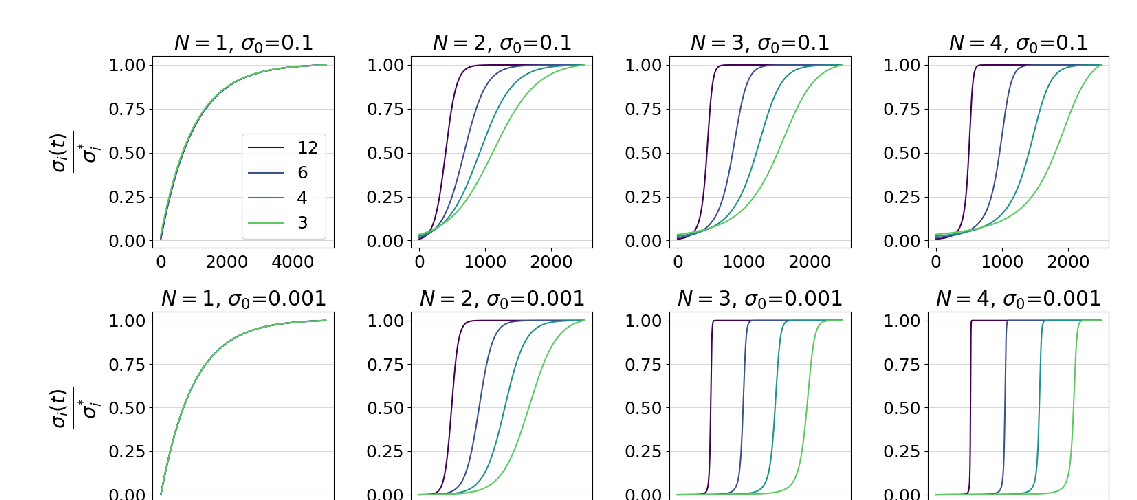

Finally, we demonstrate the limits of our FA variant when the number of output neurons grows beyond a certain threshold.