Abstract:

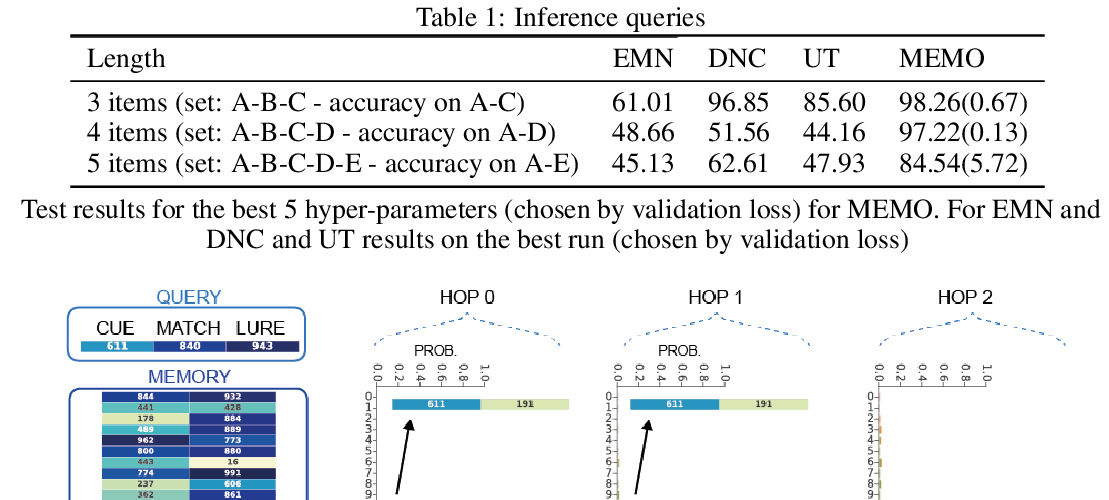

We study the problem of learning an associative memory model -- a system which is able to retrieve a remembered pattern based on its distorted or incomplete version.

Attractor networks provide a sound model of associative memory: patterns are stored as attractors of the network dynamics and associative retrieval is performed by running the dynamics starting from a query pattern until it converges to an attractor.

In such models the dynamics are often implemented as an optimization procedure that minimizes an energy function, such as in the classical Hopfield network.

In general it is difficult to derive a writing rule for a given dynamics and energy that is both compressive and fast.

Thus, most research in energy-based memory has been limited either to tractable energy models not expressive enough to handle complex high-dimensional objects such as natural images, or to models that do not offer fast writing.

We present a novel meta-learning approach to energy-based memory models (EBMM) that allows one to use an arbitrary neural architecture as an energy model and quickly store patterns in its weights.

We demonstrate experimentally that our EBMM approach can build compressed memories for synthetic and natural data, and is capable of associative retrieval that outperforms existing memory systems in terms of the reconstruction error and compression rate.