Abstract:

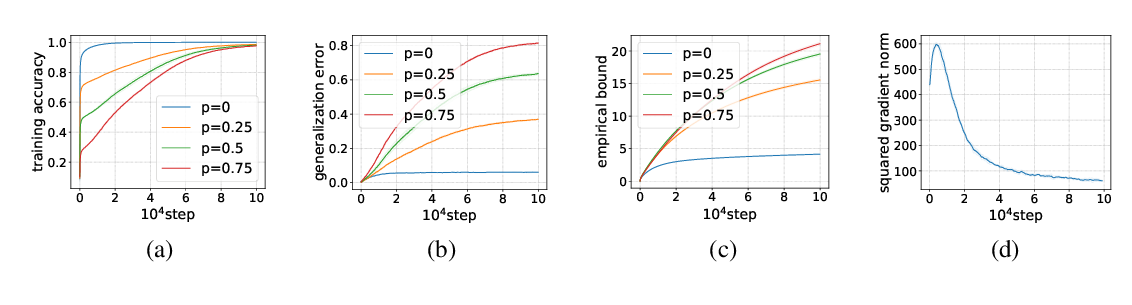

We prove bounds on the generalization error of convolutional networks. The bounds are in terms of the training loss, the number of parameters, the Lipschitz constant of the loss, and the distance from the weights to the initial weights. They are independent of the number of pixels in the input and of the height and width of hidden feature maps. We present experiments on CIFAR-10 with varying hyperparameters of a deep convolutional network, comparing our bounds with the observed generalization gaps.
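The sketch below illustrates, under stated assumptions, a few of the quantities the abstract mentions. It does not reproduce the bound itself. The helper names `conv2d_param_count`, `distance_from_init`, and `generalization_gap` are hypothetical. The parameter count of a standard convolutional layer depends only on kernel size and channel counts, never on the input height or width. This is consistent with a bound stated in terms of the number of parameters not growing with the number of pixels. Measuring the distance to initialization with the Euclidean (Frobenius) norm of the flattened weights is an assumption here, and the norm used in the paper may differ.

```python
import math
from typing import Sequence


def conv2d_param_count(kernel_h: int, kernel_w: int, c_in: int, c_out: int,
                       bias: bool = True) -> int:
    """Trainable parameters in one standard 2D convolutional layer (hypothetical helper).

    Input height and width never appear: the same filters are shared
    across every spatial position of the feature map.
    """
    weights = kernel_h * kernel_w * c_in * c_out
    return weights + (c_out if bias else 0)


def distance_from_init(weights: Sequence[float], init: Sequence[float]) -> float:
    """Euclidean distance between flattened current and initial weights.

    ASSUMPTION: the Frobenius/Euclidean norm is used for illustration only.
    """
    if len(weights) != len(init):
        raise ValueError("weight vectors must have the same length")
    return math.sqrt(sum((w - w0) ** 2 for w, w0 in zip(weights, init)))


def generalization_gap(train_loss: float, test_loss: float) -> float:
    """Empirical generalization gap: test loss minus training loss."""
    return test_loss - train_loss


if __name__ == "__main__":
    # A hypothetical 3x3 layer mapping 64 -> 128 channels has the same
    # parameter count on 32x32 CIFAR-10 maps as on much larger images.
    print(conv2d_param_count(3, 3, 64, 128))
    print(distance_from_init([1.0, 2.0, 2.0], [0.0, 0.0, 0.0]))
    print(generalization_gap(0.25, 0.75))
```

Because `conv2d_param_count` takes no spatial dimensions as arguments, changing the input resolution cannot change its result. Only the kernel size and channel widths enter.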