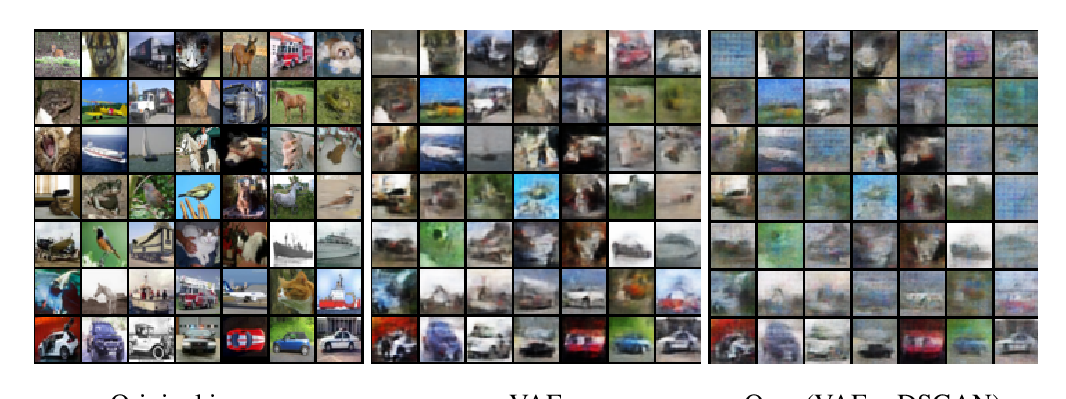

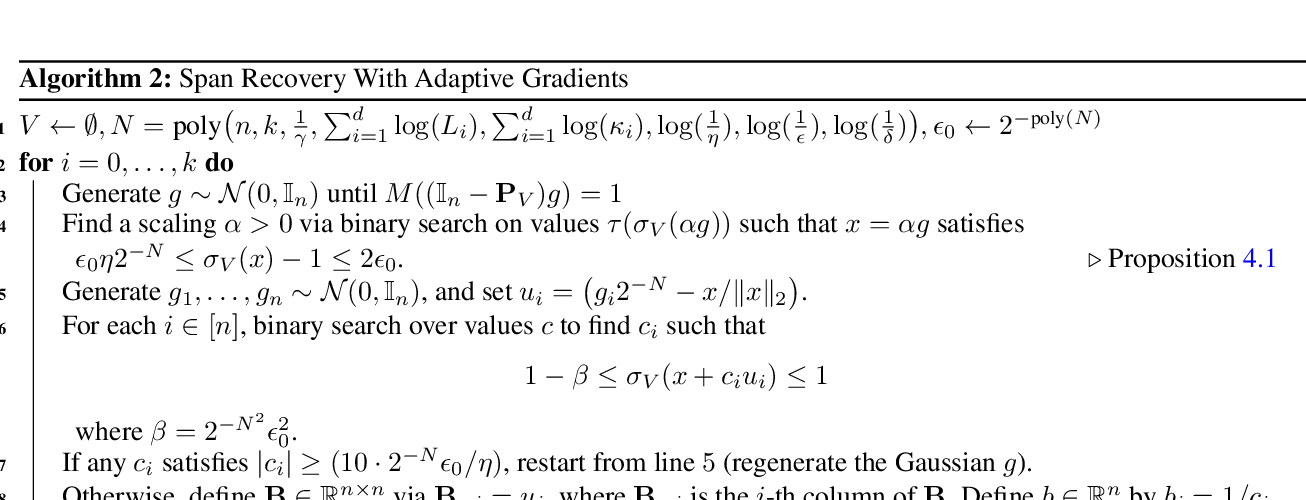

Span Recovery for Deep Neural Networks with Applications to Input Obfuscation

Rajesh Jayaram, David P. Woodruff, Qiuyi Zhang

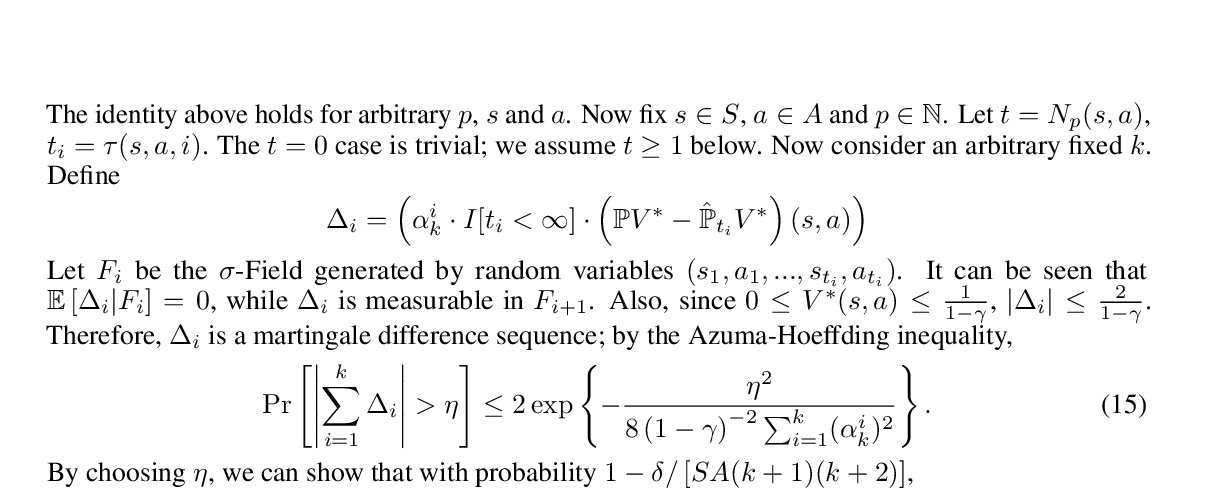

Q-learning with UCB Exploration is Sample Efficient for Infinite-Horizon MDP

Yuanhao Wang, Kefan Dong, Xiaoyu Chen, Liwei Wang

Difference-Seeking Generative Adversarial Network--Unseen Sample Generation

Yi Lin Sung, Sung-Hsien Hsieh, Soo-Chang Pei, Chun-Shien Lu